At GameChanger (GC), we recently decided to use Kafka as a core piece of our new data pipeline project. To take advantage of new technologies like Kafka, GC needs a clean and simple way to add new box types to our infrastructure. This is the first in a series of two blog posts exploring this concept. (See Tom Leach’s post on how we switched our infrastructure to use Docker for more in-depth background.)

In this post I will be covering the 5 steps needed to add a new box type to production at GC.

- Create a new AWS launch config

- Create a launch script that sets up a docker box.yml

- Create an image that will be the base of a docker container

- Scale new boxes with an autoscaling group

- Set up security groups

This post is done with an eye on getting a basic Kafka cluster set up in production, which will be discussed in the next post. Along the way I will give some insights into our infrastructure and our use of both AWS and Docker.

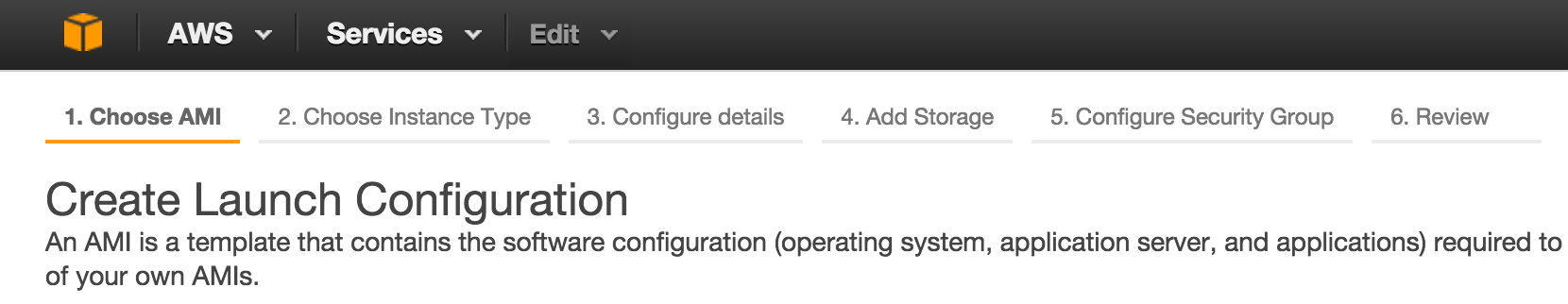

1. Create a new AWS launch config

At GC we rely heavily on AWS’ Auto Scaling feature. Therefore, when you are creating a new box type, the first thing you need is a new Launch Configuration. Launch configs allow you to specify three things critical to GC’s box scaling: the Amazon Machine Image (AMI), the box type (the EC2 instance type), and a script the box runs at startup (User Data). In our infrastructure we use a standard AMI for all of our boxes and choose a simple starting box type for every new system (m3.large). The real meat of how GC scales boxes is in how we use the launch script.
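As a rough illustration, the three launch config settings map onto the parameters of boto3's `create_launch_configuration` call. This is a minimal sketch, not our actual tooling: the launch config name, the AMI ID, and the User Data script are placeholders, and the helper names are made up for this example.

```python
def build_launch_config_params(name, ami_id, user_data, instance_type="m3.large"):
    """Collect the three settings called out above: AMI, box type, and User Data."""
    return {
        "LaunchConfigurationName": name,
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "UserData": user_data,
    }


def create_launch_config(params):
    """Send the request to AWS. Needs boto3 and AWS credentials to run."""
    import boto3  # imported lazily so the helper above works without boto3

    autoscaling = boto3.client("autoscaling")
    autoscaling.create_launch_configuration(**params)


params = build_launch_config_params(
    name="kafka-launch-config",  # hypothetical name
    ami_id="ami-00000000",       # placeholder AMI ID
    user_data="#!/bin/bash\n# the launch script would write box.yml here\n",
)
print(params["InstanceType"])
```

Keeping the parameter building separate from the AWS call makes it easy to review or log exactly what a new box type will launch with before anything is created.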

2. Create a launch script that sets up a docker box.yml

The launch script is important for a few reasons. First, it sets up application-specific data: the script on a web box sets up web-specific info, the script on an api box sets up api-specific info, and so on. Second, because most GC processes run in docker containers, the script needs to set up how containers should run on each box type. We wanted an easy way to specify both app-specific and container-specific configs with this script, and we settled on a format based on docker compose’s docker-compose.yml file. (One quick note: we set this up before EC2 Container Service (ECS), so our decisions might look different if we were doing this project today.) The YAML config looks like the following:

    services:
      - service_name_1
      - service_name_2
    apps:
      app_name:
        depends:
          - other_app_name
        elb: name_of_elastic_load_balancer
        image: image_to_base_container_off_of
        containers:
          container_name:
            restart_on_failure: False
            use_host_hostname: True_or_False
            ports:
              - ports_to_forward
            logs:
              - location_of_log_files
            volumes:
              - volumes_to_expose
            envs:
              - environment_variables_to_set
            command: optional_command_to_run_in_container

This config is very similar to the docker compose config, which brings up the question: Why did we not just use docker compose (DC) in production? One of the biggest reasons came from DC’s own docs:

Compose is great for development, testing, and staging environments, as well as CI workflows.

This statement strongly implies that DC should not be used in production. On top of this, there were also configuration needs that DC did not provide.

One of the most important features that DC did not provide was a good way to specify Elastic Load Balancers (ELBs). Knowing each box’s ELB allows us to deregister a box from its ELB before we deploy containers and to add it back once the containers are deployed. This ensures that no traffic is routed to a box while its containers are down or starting up, which lets us maintain uptime while deploying. We enable this with the elb attribute.
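To make the deregister and re-register idea concrete, here is a minimal sketch written as a context manager. The two client methods are the classic ELB calls from boto3 (`boto3.client("elb")`); the function name, the instance ID, and the recording stand-in client are assumptions for this example, so it runs without AWS access.

```python
from contextlib import contextmanager


@contextmanager
def out_of_rotation(elb_client, elb_name, instance_id):
    """Deregister a box from its ELB for the duration of a deploy."""
    instances = [{"InstanceId": instance_id}]
    elb_client.deregister_instances_from_load_balancer(
        LoadBalancerName=elb_name, Instances=instances
    )
    yield
    # Only reached if the deploy succeeded: in this sketch a failed deploy
    # leaves the box out of rotation instead of sending it traffic again.
    elb_client.register_instances_with_load_balancer(
        LoadBalancerName=elb_name, Instances=instances
    )


class RecordingELBClient:
    """Stand-in for boto3.client("elb") that just records the calls."""

    def __init__(self):
        self.calls = []

    def deregister_instances_from_load_balancer(self, LoadBalancerName, Instances):
        self.calls.append(("deregister", LoadBalancerName))

    def register_instances_with_load_balancer(self, LoadBalancerName, Instances):
        self.calls.append(("register", LoadBalancerName))


client = RecordingELBClient()
with out_of_rotation(client, "web-production-vpc", "i-0123456789abcdef0"):
    client.calls.append(("deploy containers", "web-production-vpc"))

print([step for step, _ in client.calls])
```

Whether a box should rejoin the ELB after a failed deploy is a design choice; this sketch keeps it out, on the assumption that a box with broken containers should not receive traffic.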

At GC, we have a standard set of services that run on all of our boxes (rsyslog and cloudwatch statsd). These services, when specified, run with uniform configs. We wanted to cut down on copy and paste and the need to remember these configs. Using the services key, you can specify which of our services should be running on the box. The docker launch script will then take care of launching and configuring each one.
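One way to picture the services key is as a lookup into a table of uniform configs. The sketch below is an assumption about how such a lookup could work, not our launch script: the table contents, the image names, and the function name are all hypothetical.

```python
# Hypothetical uniform configs for the standard services.
STANDARD_SERVICES = {
    "rsyslog": {"image": "registry.example.com/rsyslog"},
    "cloudwatch-statsd": {"image": "registry.example.com/cloudwatch-statsd"},
}


def resolve_services(box_config):
    """Map each name under the "services" key to its uniform config."""
    resolved = {}
    for name in box_config.get("services", []):
        if name not in STANDARD_SERVICES:
            raise ValueError("unknown service: {}".format(name))
        # Copy so callers cannot mutate the shared defaults.
        resolved[name] = dict(STANDARD_SERVICES[name])
    return resolved


box_config = {"services": ["rsyslog", "cloudwatch-statsd"]}
print(sorted(resolve_services(box_config)))
```

Because a box.yml only names the services, a change to a service's config happens in one place instead of in every box type.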

Logs are as valuable at GC as they are at most companies. They provide a wealth of information, so it is important to keep them somewhere they are easily searchable. We use rsyslog to take all logs on a box and send them to our log tracking service. To do this, rsyslog looks in a special directory on the box, /special/logs/directory, which means that all process logs should be forwarded to this location. For the sake of simplicity, accuracy, and future proofing, we did not want to specify a docker volume mount in each box.yml. Instead, we use the logs key to specify where the application’s logs are located in the container. The docker launch script will then ensure that the application’s log directories are forwarded to the proper host directory.
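As an illustration of what "forwarding" a log directory can mean, the sketch below turns the paths listed under a logs key into docker bind mount strings of the form host_dir:container_dir. The layout under the host directory (one subdirectory per container, then the last component of the container path) is an assumption for this example, as is the function name.

```python
import posixpath

HOST_LOG_ROOT = "/special/logs/directory"


def log_bind_mounts(container_name, log_dirs, host_root=HOST_LOG_ROOT):
    """Build "host_dir:container_dir" bind mounts for a container's log dirs."""
    mounts = []
    for container_dir in log_dirs:
        # Use the last path component so two log dirs do not collide on the host.
        leaf = posixpath.basename(container_dir.rstrip("/"))
        host_dir = posixpath.join(host_root, container_name, leaf)
        mounts.append("{}:{}".format(host_dir, container_dir))
    return mounts


print(log_bind_mounts("mongos", ["/container_location/mongodb"]))
```

With a helper like this, a box.yml only needs to say where logs live inside the container, and the host side of the mount stays a detail of the launch script.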

On top of those already mentioned, there are some other minor differences between our YAML config and DC’s. These mainly exist for convenience, speed, and ease when creating and launching new box types. As an example of an actual config, our web box.yml can be seen below:

    services:
      - rsyslog
      - cloudwatch-statsd
    apps:
      mongos:
        image: <gc_docker_registry>/mongos
        containers:
          mongos:
            use_host_hostname: True
            env:
              gcenv: prod
            ports:
              <port1>: <port1>
        logs:
          mongos:
            - /container_location/mongodb
      gcweb:
        depends:
          - mongos
        elb: web-production-vpc
        image: <gc_docker_registry>/gcweb
        containers:
          gcweb:
            ports:
              <port2>: <port2>
            env:
              gcenv: prod
        logs:
          gcweb:
            - /container_location/gcweb

3. Create an image that will be the base of a docker container

Once you have configured your box.yml, you still need a docker image for the container to run off of. Luckily, docker makes it really easy to build new images using Dockerfiles. At GC, we make heavy use of Dockerfiles: we store all of our Dockerfiles in their respective app repos and store built and saved images in a private docker registry. We are also continually updating our docker images. A new image is built and pushed to our registry each time we commit and deploy a repo. Boxes then pull new docker images from our registry at container launch time. So when creating a new box type, all you need to do is create a new Dockerfile, use it to create an image, and push that image to our private registry.
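For a sense of what "build an image and push it" looks like from Python, here is a minimal sketch using the Docker SDK for Python (`client.images.build` and `client.images.push`). The registry host, app name, tag, and function name are placeholders, and the client is passed in so the function can be exercised without a docker daemon.

```python
def build_and_push(client, repo_path, registry, app_name, tag="latest"):
    """Build the Dockerfile in repo_path and push the image to a registry.

    `client` is expected to behave like docker.from_env() from the
    Docker SDK for Python.
    """
    repository = "{}/{}".format(registry, app_name)
    reference = "{}:{}".format(repository, tag)
    client.images.build(path=repo_path, tag=reference)
    client.images.push(repository, tag=tag)
    return reference


# Example usage against a real daemon (needs the `docker` package):
#
#   import docker
#   build_and_push(docker.from_env(), ".", "registry.example.com", "kafka")
```

Tagging each image with something tied to the commit (rather than only "latest") is one way to make it clear which build a box pulled, though that is a suggestion rather than a description of our setup.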

You now know about our standard box config and our process for managing docker images, but you may still be wondering how containers are actually launched on our boxes. If docker compose is not doing it, what is reading each box.yml and launching our containers? Since we have a custom box.yml, we made a custom Python script (using docker-py) to launch our containers. This script is put in a recipe and is run on all boxes by chef (on box launch) and serf (on deploy). A pseudocode version of the script can be seen below:

    def get_apps_in_order(box_config):
        # Build a dependency graph so each app deploys after the apps it depends on.
        for app_name, app_config in box_config.get('apps', {}).items():
            # Apps without a "depends" key (such as mongos) have no dependencies.
            dag.add_node(app_name, app_config, depends_on=app_config.get('depends', []))
        return dag.topological_sort()

    def docker_deploy_app(app_config):
        # Take the box out of its ELB so no traffic arrives while containers restart.
        deregister_box_from_elb(app_config)
        stop_existing_containers()
        start_new_containers(app_config)
        register_box_with_elb(app_config)

    def deploy_now():
        box_config = read_box_yaml()
        ordered_deploying_apps = get_apps_in_order(box_config)
        for app_config in ordered_deploying_apps:
            get_lock_on_app()
            docker_deploy_app(app_config)
            release_lock_on_app()
        # Start the standard services listed under the "services" key.
        ensure_services()

The script follows a simple structure:

- Get a list of all apps. The apps are sorted by dependency. So if app1 depends on app2, app1 is later in the list.

- Grab the lock on the app. (We use locks to ensure only one box is deployed at a time)

- Deploy Containers:

  - Remove the box from the ELB

  - Deploy the new docker containers

  - Place the box back in the ELB

- Ensure that requested services are running
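The dependency ordering in the first step can be sketched with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). This is an illustration of the technique rather than our script, which uses its own graph helper; the config below is a trimmed-down version of the web box.yml.

```python
from graphlib import TopologicalSorter


def get_apps_in_order(box_config):
    """Return app names so that every app comes after the apps it depends on."""
    sorter = TopologicalSorter()
    for app_name, app_config in box_config.get("apps", {}).items():
        # Apps with no "depends" key are added with no predecessors.
        sorter.add(app_name, *app_config.get("depends", []))
    # static_order() raises graphlib.CycleError on circular dependencies.
    return list(sorter.static_order())


box_config = {
    "apps": {
        "gcweb": {"depends": ["mongos"]},
        "mongos": {},
    }
}
print(get_apps_in_order(box_config))  # ['mongos', 'gcweb']
```

Because gcweb depends on mongos, mongos is deployed first even though gcweb appears first in the config.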

4. Scale new boxes with an autoscaling group

Now that our boxes can launch their containers, we have just two steps left. The first is creating a new AWS Auto Scaling Group (ASG). Creating ASGs is done through the AWS Management Console, and I will go over the specifics in my next blog post.

ASGs are very useful. They allow you to specify a set of scaling policies for when to launch and shut down boxes. At GC, we use CPU, memory, and custom cloudwatch metrics as triggers to automatically scale our boxes up and down. These scaling features are nice for production-ready apps, but are not needed when initially setting up an ASG. Instead, you can use ASGs to manually tell AWS to scale up boxes with your new box type.
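Although we create ASGs in the console, the same settings can be expressed through boto3, which may help show what an ASG needs at a minimum. This is a sketch under assumptions: the group name, launch config name, subnet IDs, and sizes are placeholders, and the helper names are made up for this example.

```python
def build_asg_params(name, launch_config_name, subnet_ids,
                     desired=1, min_size=0, max_size=3):
    """Parameters for boto3's create_auto_scaling_group, with no scaling policies."""
    if not min_size <= desired <= max_size:
        raise ValueError("desired capacity must be between min_size and max_size")
    return {
        "AutoScalingGroupName": name,
        "LaunchConfigurationName": launch_config_name,
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
        # The API takes subnet IDs as one comma-separated string.
        "VPCZoneIdentifier": ",".join(subnet_ids),
    }


def scale_manually(name, desired):
    """Manually set how many boxes the ASG should run. Needs boto3 and credentials."""
    import boto3  # imported lazily so build_asg_params works without boto3

    autoscaling = boto3.client("autoscaling")
    autoscaling.set_desired_capacity(AutoScalingGroupName=name, DesiredCapacity=desired)


asg_params = build_asg_params(
    "kafka-asg",                      # hypothetical ASG name
    "kafka-launch-config",            # hypothetical launch config name
    ["subnet-aaaa", "subnet-bbbb"],   # placeholder subnet IDs
    desired=3,
    max_size=5,
)
print(asg_params["VPCZoneIdentifier"])
```

Changing the desired capacity by hand is enough to bring up the first few boxes of a new type; scaling policies can be attached later once the app is production ready.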

5. Set up security groups

The final step to adding a new box type is setting up security groups. Security groups control IP and port access rights between boxes in your cluster and between boxes and the internet. They can be used to help organize your system and to isolate pieces in order to limit vulnerabilities. Like ASGs, security groups should be set up using the AWS Management Console. I will cover the specifics next time.
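To show what a box-to-box access rule looks like as data, here is a minimal sketch that builds an entry in the `IpPermissions` format accepted by boto3's `authorize_security_group_ingress`. The group IDs are placeholders, port 9092 (Kafka's default broker port) is only an example, and the helper names are made up for this illustration.

```python
def ingress_rule_from_group(port, source_group_id, protocol="tcp"):
    """Allow boxes in source_group_id to reach the given port."""
    return {
        "IpProtocol": protocol,
        "FromPort": port,
        "ToPort": port,
        "UserIdGroupPairs": [{"GroupId": source_group_id}],
    }


def allow_ingress(group_id, rules):
    """Apply the rules to a security group. Needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the rule builder works without boto3

    ec2 = boto3.client("ec2")
    ec2.authorize_security_group_ingress(GroupId=group_id, IpPermissions=rules)


# Let boxes in a (placeholder) web security group talk to port 9092.
rule = ingress_rule_from_group(9092, "sg-11111111")
print(rule["FromPort"], rule["ToPort"])
```

Referencing a source security group instead of an IP range means new boxes in that group get access automatically as they scale up.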

That’s it! That’s all there is to adding a new box type at GC. As you can probably tell from the post, steps 1, 4, and 5 use very standard AWS features. The real meat of adding a new box type is steps 2 and 3. These focus on specifying which containers should be running on our boxes, and what should be running in each container. Along with our custom Python script, these steps are the backbone of launching boxes using docker in our infrastructure. We have continually optimized these steps to make adding new boxes and services easy. I will discuss the specifics of these 5 steps when adding our new Kafka cluster in my next post.