Introduction

GameChanger recently released an all-new app, GC Team Manager. This wonderful new product helps youth sports communities communicate, coordinate, and organize their teams’ lives. I have been lucky enough to be on the team that gets to build the backend for this new app. While building out this completely new backend, I have gotten to work on a lot of different pieces of the system. The one I want to dive deeply into today is our sync system.

Sync is a pretty generic, buzzwordy programming term, so it is important to clarify what sync means in this context. In this article, sync is the process of ensuring that all devices in our system have a shared, up-to-date view of the world. If one device sees the state of Team A one way, all other devices should see the exact same state for Team A. If a device makes a change to Team A, that change should be reflected instantaneously across all other devices. Note that this is the ideal version of sync. There are many constraints and challenges that make it hard to achieve, but this is the goal I set out to build towards. Sync needed to work this way in our app for three reasons:

- Users expect it - Users have come to expect updates to propagate almost immediately across their own and their friends’ devices.

- Avoids confusion between users - The closer to instantaneous an app’s updates are, the less likely it is that two users will find their data has diverged. We want to avoid two coaches on the same team seeing different versions of that team’s data.

- Keeps reconciliation simple - The longer data is allowed to diverge, the more work it takes to reconcile the changes into one object. We want to limit both the potential for divergence and the complexity of reconciliation.

Having settled on what sync means, the rest of this article describes the design and architectural decisions and trade-offs that went into solving the sync problem for our new Team Management app. A follow-up article will dive into the nitty-gritty implementation of our sync system. But before I can fully describe the trade-offs made in designing this system, I must describe its constraints:

- Each app needs to operate independently - Each app must be able to create, read, update, and delete data without having to coordinate with other apps. Our apps should not have to solve this distributed systems problem. This keeps our app quick and responsive and lets our app developers spend more of their time building features users want to see. That means the solution, its complexity, and its implementation need to live in the backend.

- Apps need to be fast - Sacrificing the speed and usability of the app to make this sync system work is a non-starter.

- The solution must handle network outages and offline usage - The parents and coaches who use our apps sometimes find themselves at sports fields with poor or no network coverage.

- The nearer to instantaneous the update, the better - See the ideal sync system described above.

- Do not drain the device’s battery - The fastest path to getting your app uninstalled is to kill the phone’s battery, so our solution needs to be as battery efficient as possible.

- Take an iterative approach and spend as little time as possible - Since we are designing a totally new backend for this new app, there are tons of features that need to be built to deliver real value to our users. We cannot drop everything to make this system perfect, and anything that lets us build it iteratively is a big plus.

Given these constraints, I want to cover four broad alternatives we considered when designing this system. For each architecture I will discuss its pros and cons. At the end I will describe the architecture we chose for our sync system.

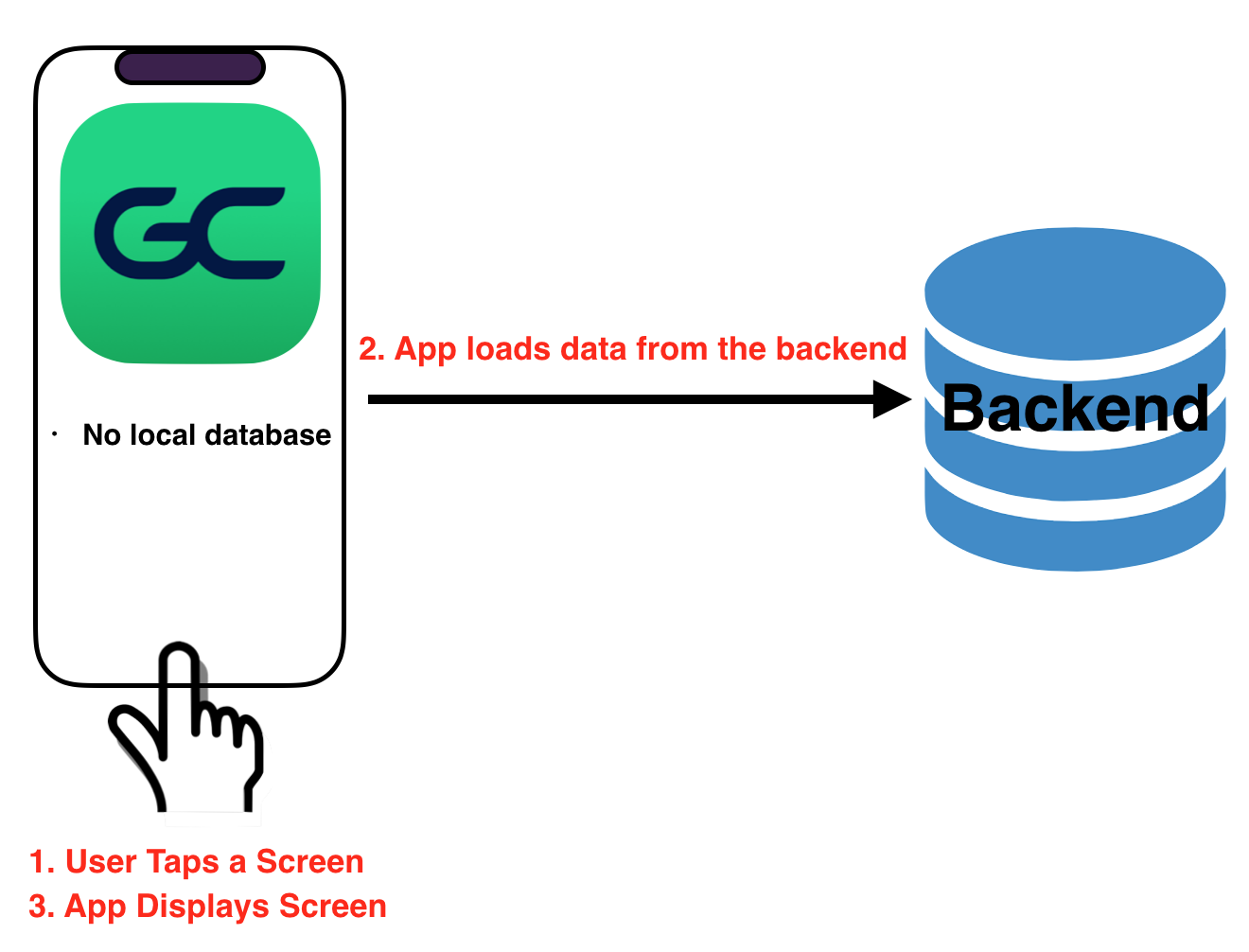

1. Just In Time Loading

The idea behind just-in-time loading (JITL) is pretty simple. The app stores no data locally, i.e. there is no local database. When it needs to display a new screen, the app goes to the backend and loads all the data it needs. It then displays that data on the device. On the next screen, the app simply rinses and repeats.
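To make the request pattern concrete, here is a minimal sketch of JITL. It is an illustration, not our client code: `JitlBackend` is a hypothetical in-process stand-in for the HTTP backend that only counts requests, and the resource names are invented.

```python
from __future__ import annotations


class JitlBackend:
    """Hypothetical stand-in for the HTTP backend; counts requests served."""

    def __init__(self, data: dict[str, dict]) -> None:
        self.data = data
        self.request_count = 0

    def fetch(self, resource: str) -> dict:
        # Each call represents one network round trip.
        self.request_count += 1
        return dict(self.data[resource])


class JitlApp:
    """Keeps no local database: every screen load goes back to the backend."""

    def __init__(self, backend: JitlBackend) -> None:
        self.backend = backend

    def show_screen(self, resources: list[str]) -> dict[str, dict]:
        # One request per resource the screen needs (the "1 to N" requests).
        return {name: self.backend.fetch(name) for name in resources}


backend = JitlBackend({"team:1": {"name": "Tigers"}, "schedule:1": {"games": 3}})
app = JitlApp(backend)
app.show_screen(["team:1", "schedule:1"])
app.show_screen(["team:1"])  # revisiting data still costs another request
print(backend.request_count)  # 3
```

Because nothing is cached, the request count grows with every screen visit, which is the root of the speed, offline, and battery problems listed below.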

Positives:

- Simple - This approach is very simple from both a backend and a client perspective. Since we are using HTTP as the app’s sync protocol, it is incredibly lightweight.

- Data is up to date - Since the app pulls data from the backend right when it needs it, users will see the most up-to-date version of the data. This meets the ideal of our sync system.

- No need to maintain a local database - This is strongly related to the point about simplicity, but building and maintaining a local database for the app is complex enough that avoiding it merits its own point.

Negatives:

- Slow - Network requests are some of the slowest calls anyone can make in computing. Cellular network requests take even more time due to bandwidth constraints and latency. In this system the app must delay displaying every screen until it has made anywhere between 1 and N network requests. This slows the app to a crawl and makes it nigh unusable.

- Only works online - This violates one of our constraints. If the app only displays a screen after loading data from the network, it becomes unusable offline.

- This wastes phone battery - Using a device’s network card has a high battery cost. The ideal way to use the network is to make a batch of requests all at once and then leave the network off for a period of time. In this scenario we would constantly be turning the network card on and off, wasting battery.

- Data is not always up to date - If a user loads a screen and then sits on it for a while, the JITL system can still lead to divergence: another device can change the displayed data in the background, and this screen will not reflect it, causing confusion.

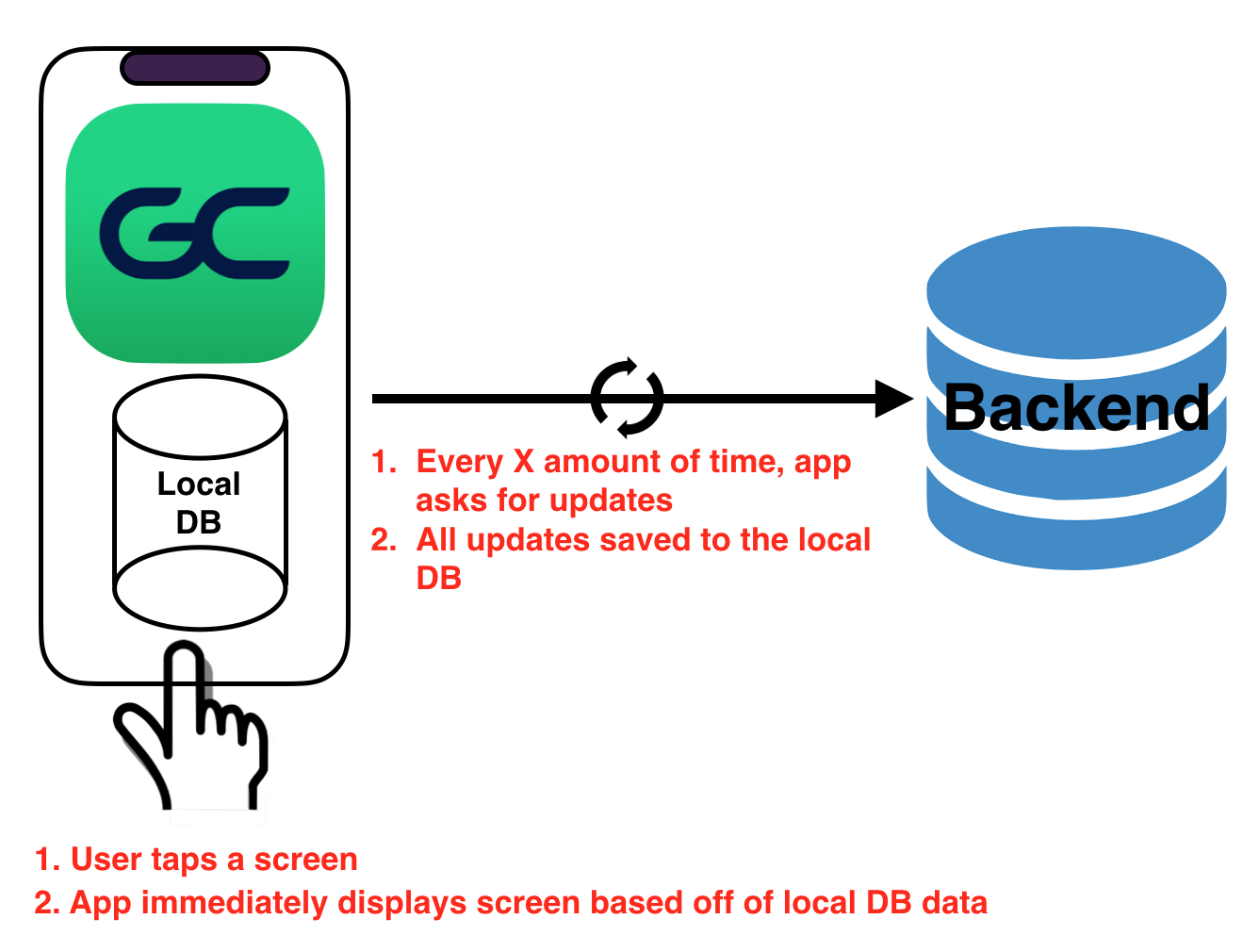

2. Asynchronous Polling

The Asynchronous Polling (AP) system is very different from JITL. First, this system involves a local database, which stores a version of all the data the app needs. Every time the app wants to display a screen, it uses the data in its local database. Second, instead of loading data from the backend every time it displays a screen, the app loads data every X minutes and saves it into its local database.
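A minimal sketch of the AP loop might look like the following. It assumes SQLite as the local database and simulates the every-X-minutes timer with explicit clock ticks so it runs deterministically; `PollingBackend`, `fetch_all`, and the key/value schema are hypothetical illustrations rather than our real API.

```python
from __future__ import annotations

import sqlite3


class PollingBackend:
    """Hypothetical backend; fetch_all() stands in for one HTTP sync request."""

    def __init__(self, data: dict[str, str]) -> None:
        self.data = data
        self.online = True
        self.request_count = 0

    def fetch_all(self) -> dict[str, str]:
        if not self.online:
            raise ConnectionError("no network")
        self.request_count += 1
        return dict(self.data)


class PollingApp:
    """Screens read only from a local SQLite database; a timer refreshes it."""

    def __init__(self, backend: PollingBackend, poll_every_minutes: int) -> None:
        self.backend = backend
        self.poll_every_minutes = poll_every_minutes
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE records (key TEXT PRIMARY KEY, value TEXT)")

    def on_clock_tick(self, minute: int) -> None:
        # Poll every X minutes whether or not anything changed on the backend.
        if minute % self.poll_every_minutes == 0:
            self.poll_once()

    def poll_once(self) -> bool:
        try:
            snapshot = self.backend.fetch_all()
        except ConnectionError:
            return False  # offline: keep serving whatever is already stored
        with self.db:  # commit the whole snapshot in one transaction
            self.db.executemany(
                "INSERT OR REPLACE INTO records (key, value) VALUES (?, ?)",
                snapshot.items(),
            )
        return True

    def read(self, key: str) -> str | None:
        # Rendering a screen never touches the network.
        row = self.db.execute(
            "SELECT value FROM records WHERE key = ?", (key,)
        ).fetchone()
        return row[0] if row else None


backend = PollingBackend({"team:1": "Tigers"})
app = PollingApp(backend, poll_every_minutes=5)
for minute in range(15):
    app.on_clock_tick(minute)
print(backend.request_count)  # 3 (minutes 0, 5, 10), although nothing changed
backend.online = False
backend.data["team:1"] = "Lions"
app.poll_once()
print(app.read("team:1"))  # Tigers (stale, but still readable offline)
```

The sketch reloads everything on each poll, which is the simplest answer to the "what to reload?" question discussed under Open Questions.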

Positives:

- Fast - AP is a drag racer compared to JITL’s bicycle with square wheels. Replacing many network requests with local database reads makes this system fast and user friendly.

- The system now works offline - Another benefit of the local database is that the system works offline. When the app loses network connection, it just reads data from its local database and stops polling.

- More battery efficient - In the JITL system every time we loaded a screen we were spending battery life to use our network card. In this new system the app only uses the network card every X minutes. This is much more efficient.

Negatives:

- Introduced divergence and delay - One of the worst things about this solution is that it moves us further away from our ideal sync system. There is now up to an X minute delay between the time one app saves a change to the backend and the time all other devices have synced that change.

- Always runs every X minutes - Another downside of AP is that the polling loop runs whether or not any data in the backend has actually changed. This has negative consequences for both the app and the backend. For the app, it means we could be wasting CPU and battery every X minutes with no updates to show for it, making the app slower and more of a battery hog. For the backend, the consequences are dramatic. Every X minutes, every device running our app sends a series of requests to refresh its local database. This adds up to a lot of requests, and the total scales linearly with the number of devices. From past experience with our scorekeeping app, these polling sync requests can come to dominate all requests to our backend.
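A back-of-the-envelope estimate makes the linear scaling concrete. Let D be the number of devices, k the number of requests each poll makes, and X the polling interval in minutes. The example values below are hypothetical, chosen only for illustration, not measurements from our apps.

```latex
R = D \cdot k \cdot \frac{60}{X} \quad \text{(requests per hour)}

D = 10{,}000,\quad k = 3,\quad X = 5
\;\Rightarrow\; R = 10{,}000 \cdot 3 \cdot \frac{60}{5} = 360{,}000 \text{ requests per hour}
```

Nothing in this formula depends on whether any data changed: doubling the number of devices, or halving the polling interval, doubles the load.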

Open Questions:

- How does the app know what to reload? - Even with AP, the app still needs to determine what has changed and what data it should fetch. There is a spectrum of solutions to this problem. At one end, the app can reload all data currently in its local database. At the other end, the device can identify itself to the backend and ask what has changed since its last request. Because the app does not know what has changed, the pure app solution involves a lot of unneeded work. The pure backend solution requires a lot of complexity to track what each device cares about and what has changed since each device last synced. Ideally a balance is struck, where the backend has a good way to track changes and the app gives it some context on what it actually cares about. Generally, though, whatever our solution here, we will be adding complexity somewhere.
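One way to strike that balance, sketched below under stated assumptions, is an append-only change log on the backend plus a cursor on the device. The device sends its cursor and the topics it cares about; the backend only filters its log and never tracks per-device state. `ChangeLog`, the `seq` numbering, and the `team:1`-style topic names are hypothetical, and a real backend would index the log rather than scan it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Change:
    seq: int       # position in the backend's global change log
    topic: str     # hypothetical naming scheme, e.g. "team:1"
    payload: dict


@dataclass
class ChangeLog:
    """Hypothetical append-only change log kept by the backend."""

    entries: list[Change] = field(default_factory=list)

    def record(self, topic: str, payload: dict) -> int:
        seq = len(self.entries) + 1
        self.entries.append(Change(seq, topic, payload))
        return seq

    def changes_since(self, cursor: int, topics: set[str]) -> tuple[list[Change], int]:
        # The device supplies context (its cursor and its topics); the backend
        # just filters. A linear scan keeps the sketch short.
        relevant = [c for c in self.entries if c.seq > cursor and c.topic in topics]
        latest = self.entries[-1].seq if self.entries else 0
        return relevant, max(cursor, latest)


log = ChangeLog()
log.record("team:1", {"name": "Tigers"})
log.record("team:2", {"name": "Lions"})
log.record("team:1", {"name": "Tigers 12U"})
changes, cursor = log.changes_since(0, {"team:1"})
print([c.seq for c in changes], cursor)  # [1, 3] 3
changes, cursor = log.changes_since(cursor, {"team:1"})
print(changes, cursor)  # [] 3
```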

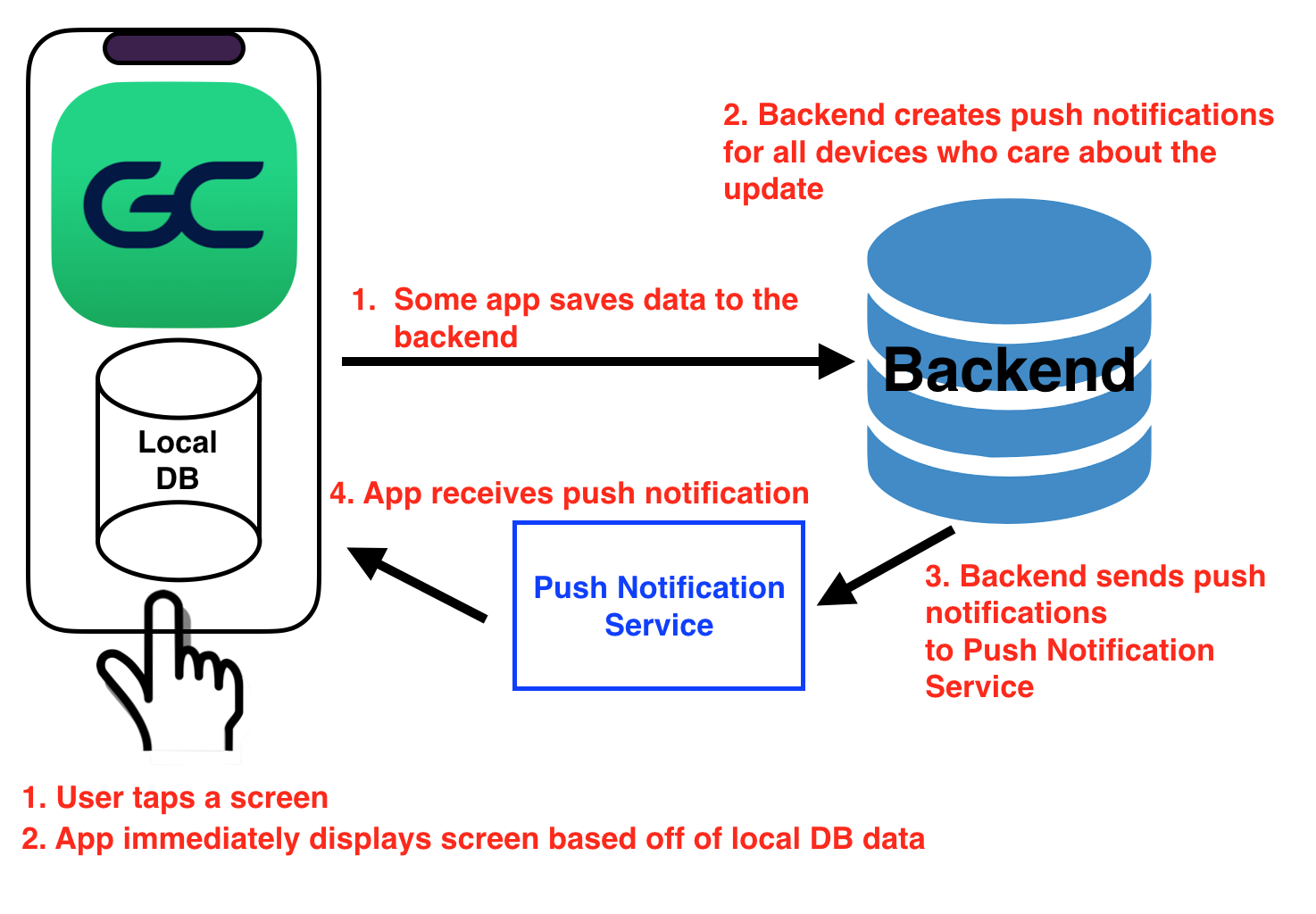

3. Targeted Push Notifications

Targeted Push Notifications (TPN) addresses the failures of the AP system. It keeps the app’s local database, which confers the same speed and offline benefits as in AP. But now, when an app saves data to the backend, the backend is responsible for notifying every device that cares that something has changed. The backend does this by transforming each database update into a set of per-device updates and sending them to a push notification service such as the Apple Push Notification service (APNs).
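The fan-out step can be sketched as follows. This is an illustration, not our backend: `SubscriptionRegistry` is a hypothetical table of which devices care about which records, `FakePushService` stands in for a provider like APNs and merely records payloads, and skipping the device that made the save is one possible design choice rather than something TPN requires.

```python
from __future__ import annotations

from collections import defaultdict


class SubscriptionRegistry:
    """Hypothetical backend table of which devices care about which records."""

    def __init__(self) -> None:
        self._devices_by_record: dict[str, set[str]] = defaultdict(set)

    def register(self, device_token: str, record_id: str) -> None:
        # Devices must tell the backend what they care about.
        self._devices_by_record[record_id].add(device_token)

    def devices_for(self, record_id: str) -> set[str]:
        return set(self._devices_by_record.get(record_id, set()))


class FakePushService:
    """Stand-in for a push provider such as APNs; it only records what was sent."""

    def __init__(self) -> None:
        self.sent: list[tuple[str, dict]] = []

    def send(self, device_token: str, payload: dict) -> None:
        self.sent.append((device_token, payload))


def on_database_save(record_id: str, saving_device: str,
                     registry: SubscriptionRegistry, push: FakePushService) -> None:
    # Fan one database update out into one notification per interested device,
    # skipping the device that made the change (it already has the new data).
    for token in sorted(registry.devices_for(record_id) - {saving_device}):
        push.send(token, {"changed": record_id})


registry = SubscriptionRegistry()
registry.register("phone-a", "team:1")
registry.register("phone-b", "team:1")
registry.register("phone-c", "team:2")
push = FakePushService()
on_database_save("team:1", "phone-a", registry, push)
print(push.sent)  # [('phone-b', {'changed': 'team:1'})]
```

Even in this toy form, the registry is the piece that makes TPN expensive: every device has to keep the backend informed about what it cares about.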

Positives:

- Near real time data consistency - Because push notifications are sent and received very quickly, we move much closer to the ideal of our sync system. Almost as soon as something changes, our devices should know about it and be able to reload.

- Much less load on the backend - Another benefit of dropping the polling loop is that every app no longer hits the server every X minutes. Each app only requests data when something has changed. This can drastically cut down on the total number of requests on our backend.

- More Battery Efficient - Instead of polling every X minutes, the apps now only use the network when saving data or when a push notification informs them that something they care about has changed. This solution begins to reach the upper limit of battery efficiency.

Negatives:

- iOS push notifications are not guaranteed to be delivered - As the saying goes: “In theory, there is no difference between theory and practice. But, in practice, there is.” A major drawback here is that iOS push notifications cannot be relied on to always be delivered. If we build a sync system that relies on devices getting an update, and that update is never received, the divergence in the system quickly becomes very large.

- There are no ordering guarantees - Because of how distributed systems work, when multiple updates are sent to an app, there is no guarantee that the first update will be received first. This would be true even if iOS push notifications were guaranteed to be delivered. That does not mean we cannot use push notifications, but it does mean that our sync system must take this into account.

- Conversion of database save to device push notification is very complex - This solution falls on the all-backend side of the reload question above. The backend is now forced to know what data each device cares about in order to figure out which devices need to be informed when data is saved. This means devices need a way to signal to the backend that they care about a piece of data, which leads to much higher complexity, more orchestration, and longer implementation time.
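A common, generic way to tolerate out-of-order delivery (not necessarily what we built) is to attach a version number to each record and have the device ignore any update that is not newer than what it already holds. In the sketch below, the per-record `version` that the backend bumps on every save is an assumption, as are the `Update` and `LocalStore` names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    record_id: str
    version: int  # hypothetical per-record version, bumped on every save
    data: dict


class LocalStore:
    """Device-side store that applies an update only if it is newer."""

    def __init__(self) -> None:
        self.records: dict[str, Update] = {}

    def apply(self, update: Update) -> bool:
        current = self.records.get(update.record_id)
        if current is not None and current.version >= update.version:
            return False  # stale or duplicate update arrived late: ignore it
        self.records[update.record_id] = update
        return True


store = LocalStore()
# The newer update (version 2) arrives before the older one (version 1).
store.apply(Update("rsvp:42", 2, {"status": "no"}))
store.apply(Update("rsvp:42", 1, {"status": "yes"}))  # ignored
print(store.records["rsvp:42"].data)  # {'status': 'no'}
```

This handles reordering and duplicates, but not updates that never arrive: a dropped notification still needs a fallback, such as an occasional pull from the backend.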

Open Questions:

- What level should updates be at? - Even if we already had the infrastructure built and could guarantee push notification delivery and ordering, the TPN system still leaves an open-ended question: what level of update do we send to the app? If an RSVP for person A for game X on team 1 changes, do we tell the app that the RSVP changed, the game changed, the team changed, or something else? The answer has network and complexity implications for both the backend and the frontend.

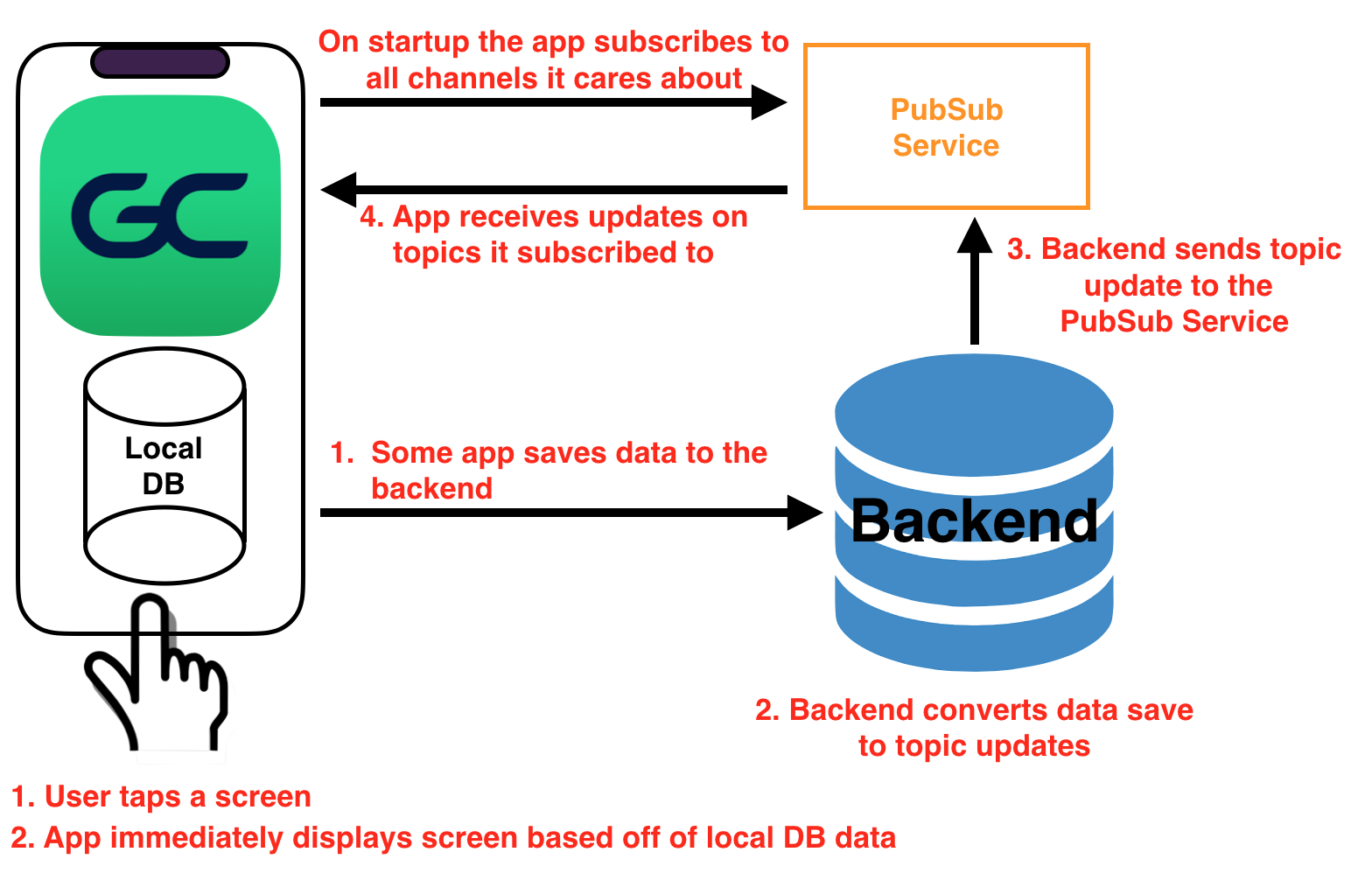

4. Pub/Sub

The Pub/Sub solution is very similar to the TPN system; both keep a local database on the device. Instead of transforming database updates into device updates, however, the Pub/Sub model converts them into a series of topic updates (in our case, teams, persons, etc.). These topic updates are sent to a Pub/Sub service. Meanwhile, each device subscribes to all of the topics it cares about. When a new topic update is pushed to the Pub/Sub service, every device subscribed to that topic receives it.
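The core idea fits in a few lines. The sketch below is a toy, in-process stand-in for a hosted Pub/Sub service (a real one is networked and persistent); `InMemoryPubSub` and the callback-per-device setup are hypothetical. What matters is that the publisher addresses topics, never devices.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Handler = Callable[[str, dict], None]


class InMemoryPubSub:
    """Toy stand-in for a hosted Pub/Sub service."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        # The publisher never learns which devices are listening.
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(topic, message)
        return len(handlers)


bus = InMemoryPubSub()
inbox_a: list[tuple[str, dict]] = []
inbox_b: list[tuple[str, dict]] = []
bus.subscribe("team:1", lambda t, m: inbox_a.append((t, m)))   # device A
bus.subscribe("team:1", lambda t, m: inbox_b.append((t, m)))   # device B
bus.subscribe("person:7", lambda t, m: inbox_b.append((t, m)))
bus.publish("team:1", {"changed": "schedule"})    # reaches both devices
bus.publish("person:7", {"changed": "profile"})   # reaches only device B
print(len(inbox_a), len(inbox_b))  # 1 2
```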

Positives:

- Near real time data consistency - see TPN positives

- Much less load on the backend - see TPN positives

- Much stronger delivery guarantees - Because we are no longer constrained to using push notifications, we have much more solid guarantees around delivery.

- Converting a backend change to a topic change is relatively simple - The topic updates used in this system are much easier to derive than device updates used in the TPN. All the backend needs to know are business rules mapping backend updates to topic updates. There is no longer a need to coordinate with each device to figure out what it cares about.
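Those business rules can be expressed as a plain function from backend event to topic names. The sketch below uses the RSVP example from the TPN open question; the event classes, the `team:`/`person:` topic scheme, and the choice to publish at team level are all hypothetical illustrations of one answer to the granularity question, not our actual rules.

```python
from __future__ import annotations

from dataclasses import dataclass
from functools import singledispatch


@dataclass(frozen=True)
class RsvpChanged:
    """Hypothetical backend event: one person's RSVP for one game changed."""
    team_id: str
    game_id: str
    person_id: str


@dataclass(frozen=True)
class TeamRenamed:
    """Hypothetical backend event: a team's name changed."""
    team_id: str
    new_name: str


@singledispatch
def topics_for(event: object) -> list[str]:
    raise TypeError(f"no topic rule for {type(event).__name__}")


@topics_for.register(RsvpChanged)
def _rsvp_topics(event: RsvpChanged) -> list[str]:
    # Assumed rule: publish at team level (plus the person); a finer-grained
    # "game:" topic would be an equally valid answer to the granularity question.
    return [f"team:{event.team_id}", f"person:{event.person_id}"]


@topics_for.register(TeamRenamed)
def _team_topics(event: TeamRenamed) -> list[str]:
    return [f"team:{event.team_id}"]


print(topics_for(RsvpChanged(team_id="1", game_id="X", person_id="A")))
# ['team:1', 'person:A']
```

No device list appears anywhere: the rules depend only on the shape of the data, which is what keeps this simpler than TPN's per-device conversion.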

Negatives:

- No ordering guarantees - See TPN negatives

- Worse battery life - Since we are no longer using efficient push notifications, we will need more network requests to keep the device up to date with the Pub/Sub service. Depending on the level at which updates are published, this might be more efficient than the polling loop described above, but it is still less efficient than push notifications.

Open Questions:

- What level should updates be at? - see TPN open questions

Conclusion

I have now discussed four different solutions, each with different trade-offs, that we could use to build our sync system. Which one did we go with, and why? Our final implementation is very much based on the Pub/Sub system described above. On top of it, we needed to add a way to handle out-of-order delivery, and we wanted to send targeted updates. We chose this implementation because:

- Pub/Sub provides near-ideal sync. This allows us to meet users’ expectations and puts us on a footing to compete with any app out there.

- We were willing to sacrifice some battery life in order to get stronger delivery guarantees. These stronger guarantees can allow the system to be much more reliable and much simpler from the app’s perspective.

- Topic updates are much simpler than device updates and allow this system to be built much more quickly.

- We were able to map out a simple iterative development path, starting with AP and evolving into Pub/Sub, that allowed this system to be built and tested in pieces.
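To illustrate how an AP-first system can evolve into Pub/Sub, here is one plausible shape, not the implementation described in the follow-up post. It assumes the backend already exposes an AP-style "changes since cursor" call, and it treats each Pub/Sub message purely as a hint to pull. Because the pull is authoritative, late, duplicated, or reordered messages are harmless, and a periodic pull can cover messages that never arrive. `SyncBackend`, `SyncingApp`, and the topic names are hypothetical.

```python
from __future__ import annotations


class SyncBackend:
    """Hypothetical backend exposing an AP-style 'changes since cursor' call."""

    def __init__(self) -> None:
        self.log: list[tuple[int, str, dict]] = []  # (seq, topic, data)

    def save(self, topic: str, data: dict) -> int:
        seq = len(self.log) + 1
        self.log.append((seq, topic, data))
        return seq

    def changes_since(self, cursor: int, topics: set[str]) -> list[tuple[int, str, dict]]:
        return [e for e in self.log if e[0] > cursor and e[1] in topics]


class SyncingApp:
    def __init__(self, backend: SyncBackend, topics: set[str]) -> None:
        self.backend = backend
        self.topics = topics
        self.cursor = 0
        self.local: dict[str, dict] = {}

    def on_topic_message(self, topic: str, message: dict) -> None:
        # The message content is ignored: it only signals "something changed".
        if topic in self.topics:
            self.pull()

    def pull(self) -> None:
        # Also callable from a slow periodic timer to recover missed messages.
        for seq, topic, data in self.backend.changes_since(self.cursor, self.topics):
            self.local[topic] = data  # entries arrive in log order
            self.cursor = max(self.cursor, seq)


backend = SyncBackend()
app = SyncingApp(backend, {"team:1"})
backend.save("team:1", {"name": "Tigers"})
backend.save("team:1", {"name": "Tigers 12U"})
# Hints arrive out of order; the first pull already fetches both changes.
app.on_topic_message("team:1", {"seq": 2})
app.on_topic_message("team:1", {"seq": 1})
print(app.local["team:1"], app.cursor)  # {'name': 'Tigers 12U'} 2
```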

If you want to learn more about the specific implementation of this solution, check out my follow-up blog post.