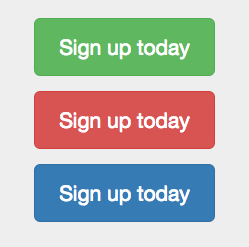

A few weeks ago, during a GameChanger Hack Day, my fellow data engineer Zach Markin and I prototyped a multi-armed bandit micro service. As a motivating example, consider the problem of deciding between these three buttons on some page of a website:

There are many ways to tackle this problem:

- Pick what you think looks best.

- Pick what you think will perform best.

- Run a test to statistically measure which performs best, and pick it.

If the thing being chosen (a “Sign up today” button in our example) is tied to an important part of your site (signups usually are,) then it is likely worth testing. Traditionally, a test is run by splitting people into test groups (and a control group if there is an existing choice,) running the test until a certain number of people have seen all of the test options, and then making a decision based off a statistical hypothesis test.

A multi-armed bandit algorithm works a bit differently. Instead of having a step where the test is running and a step where we make a decision, a multi-armed bandit is always testing and always choosing. The better a choice performs, the more often it is displayed. For example, if green gets a higher click rate, it gets displayed more. The most important advantage here is that the multi-armed bandit starts to favor the higher performing option quickly so that money isn’t lost on a poorly performing option for the entire duration of a test.

To calculate just how often each color should be displayed, I wanted to find a distribution describing the probability of each color performing best. I took a Bayesian approach, based off Sergey Feldman’s and Evan Miller’s excellent blog posts on the subject. To see what I ended up doing, skip on down to the solution, but first, I’ll briefly speak about why I used a Bayesian model.

Why Bayes?

Recently at GameChanger, we’ve increased our focus on measuring the impact of our product and design changes on our customers’ experiences. We’ve been using KissMetrics, and specifically their A-B testing tool which uses a normal approximation to calculate certainty of results. This is an efficient approximation of the Binomial distribution, which describes the probability of seeing k successes from n trials. In our case, a user landing on a page with a test is a trial; a user performing some desired interaction on the page is a success. The certainty displayed, however, comes with a few caveats.

Statistical Significance and Hypothesis Testing

As mentioned above, hypothesis testing is the traditional methodology for running a statistical test. This approach is often called the frequentist approach, and it assumes that there is some true value for the variable being measured which we are attempting to discover through our test. In our case, we measure the success rates for each of the colors of the button. We test each option, and assuming their rates are different, we can measure the probability that their true values are actually different or if the difference observed is the result random chance.

This requires us to choose a somewhat arbitrary level of significance (typically “95% confidence”) before we run our tests that will determine if the results are significant or not. We also have to be aware of the risk of committing type I and type II errors. (A type I error is incorrectly rejecting a true null hypothesis, and a type II error is the failure to correctly reject a false null hypothesis. Wikipedia expands much more on this.) This complexity is not a flaw in a frequentist approach, in fact it is part of its power. A statistical significance test typically ends with a statement that some initial hypothesis is either true or false (or inconclusive) with a given probability of being correct. This complexity, however, does not easily translate to appropriate business decisions. But there is another approach.

Bayesian Testing

Bayesian statistics is built from a different view of the world altogether. The effect that we are attempting to measure (still a success rate) does not in fact have a true value but instead a probability distribution that describes its potential values. A Bayesian statistician makes use of a prior distribution from which they have reason to believe represents the distribution of the value being measured, and then updates that from the data observed. The choice of the prior is the black art of Bayesian statistics, much like the arbitrary level of significance chosen in significance testing. However, it is easy to choose a prior which will be overwhelmed by our experimental data.

The difference here is a bit subtle, particularly if you don’t spend your days heads down in a statistics text book. (Something I’ve been guilty of.) A hypothesis is designed from the start to reach a decision at a certain level of significance. For example, in Experimental Physics, discoveries are typically required to show statistical significance of 5 sigma, or 99.99997% certainty (on two different devices) to be declared “True”. In our example, we’d likely choose 95% certainty, run the test, and decide that the blue button (for example) is the best and move on (given the caveats discussed above).

The Bayesian approach does not provide such a statement of truth. Instead, it provides a probability distribution that describes the value we are measuring. In our example, we might be able to make the statement that “there is an 88% chance that blue is best” or “there is 65% chance that blue is at least 10% better than red and green”. Additionally, the distribution is important for the multi-armed bandit algorithm; I was looking to find the individual probabilities for each color that it performs best, given the data observed.

Generally, the Bayesian approach is much easier to understand and communicate. Feldman’s post mentioned earlier provides a helpful example:

Which of these two statements is more appealing:

(1) “We rejected the null hypothesis that A=B with a p-value of 0.043.”

(2) “There is an 85% chance that A has a 5% lift over B .”

Bayesian modeling can answer questions like (2) directly.

From my experience, I tend to agree with Feldman’s analysis that questions like this second one are much easier to explain and to convert into specific and practical business advice. The hypothesis test gives a “decision” with reasonably difficult assumptions baked in whereas the Bayesian analysis gives useful information which can inform a decision with very few assumptions baked in.

Now onto how I went about finding this probability distribution.

The Solution

I started with Miller’s post (mentioned earlier), which amazingly has a closed form solution for a Bayesian A/B Test. The issue, however, is that I was aiming for an A/B/C/… test which has an arbitrary number of options. Simply formulating this isn’t an easy task, and I’m fairly convinced that a closed form solution to this does not exist. (I’d love to be proven wrong! If you’ve solved this or have read a paper that does, please shoot me an email [and come work with us!])

Luckily, Bayesian models can easily be simulated, and in fact that is the approach taken in Feldman’s post. In Python (with numpy installed) we can write a function to compare two tests:

from numpy import mean

from numpy.random import beta

def prob_a_beats_b(a, b):

# a and b each tuples: (success_count, trials_count)

a_samples = beta(a[0]+1, a[1]-a[0]+1, 10000)

b_samples = beta(b[0]+1, b[1]-b[0]+1, 10000)

return mean(a_samples > b_samples)Here we are drawing 10000 random samples from a Beta distribution updated with the observed successes and failures. Then we simply pair these samples up in order and count how often a_sample > b_sample. For example if our red button was clicked 500 out of 1,000 times, and our blue button was clicked 4,800 out of 10,000 times, we see:

In: prob_a_beats_b((500,1000), (4800, 10000))

Out: 0.88641999999999999We can see that 50% > 48% easily, but the second option has much more data, so we are not entirely sure that the first option is the best. If we compare this to the closed form solution in Miller’s post, it’s quite close. In this specific case we get 0.88631554438158755.

Now we want to push this further and compare a specific test to all the rest. None of the approaches above do this directly, but it’s fairly straight forward to expand on the prob_a_beats_b function from above:

from numpy import mean, maximum

from numpy.random import beta

def prob_a_beats_all(a, *args):

# a and each other arg is a tuple: (success_count, trials_count)

a_samples = beta(a[0]+1, a[1]-a[0]+1, 10000)

others = [beta(other[0]+1, other[1]-other[0]+1, 10000)

for other in args]

max_others = reduce(maximum, others)

return mean(a_samples > max_others)Here we’ve done almost the exact same thing as the first example, except that we are comparing against multiple other options. We sample for all of them, and then take the maximum for each one. We do the same pairing, and then measure how often the a_sample > max(other_samples). Expanding our example, we include a newly added green button which was clicked 12 out of only 30 times:

In: prob_a_beats_all((500,1000), (4800, 10000), (12, 30))

Out: 0.76488We now need one more function so that we can compute all of these probabilities and get the full distribution:

from numpy import mean, maximum

from numpy.random import beta

def prob_vector(selections):

# dict of {selection_id: (success_count, trials_count)}

weights = {

k: prob_a_beas_all(

v, *[other_v for other_k, other_v in selections.items()

if k != other_k]

) for k,v in selctions.items()

}

return weightsThis gets us very close. For our example:

In: prob_vector({'red': (500, 1000), 'blue': (4800, 10000), 'green': (12, 30)})

Out: {'red': 0.76766000000000001, 'blue': 0.091800000000000007, 'green': 0.14032}You may be surprised to notice that the probability of “green is best” is greater than blue’s. Before we address that, though, note that because this process is based off random sampling, the sum of all these probabilities is not exactly 1.0. (In this case it is 0.99978.) To deal with this, we’ll write one more function:

def normalized_prob_vector(selctions):

# dict of {selection_id: (success_count, trials_count)}

weights = prob_vector(selections)

total = sum(weights.values())

return {k: v/total for k,v in weights.items()}The output for our example now is:

In: normalized_prob_vector({'red': (500, 1000), 'blue': (4800, 10000), 'green': (12, 30)})

Out: {'red': 0.7664006736707033, 'blue': 0.091907931670542953, 'green': 0.14169139465875372}Awesome! Now, back to the value for green being greater than blue. If we measure the probability that blue > green, we get about 0.8, so we should not interpret this result that green is better than blue. Instead, because we are measuring the chance that each color is the best, we are pretty certain that red is the best and even more certain that red is better than blue. However, we don’t have nearly as much information about green, and as such there is a larger chance that as more information comes in, it may end up being best.

Final Thoughts

There is plenty of debate over Bayesian v Frequentist approaches to statistical analysis and modeling. Ultimately though, your choice depends mostly on the context of the problem. This problem in particular was well suited for a Bayesian approach, and the ability to get results through a simulation make it easy to get to an actionable result quickly. Unfortunately though, like most things, there is no silver bullet and you will want to spend some time understanding your problems and the cost and benefits of different statistical approaches.

!['Detector! What would the Bayesian statistician say if I asked him whether the--' [roll] 'I AM A NEUTRINO DETECTOR, NOT A LABYRINTH GUARD. SERIOUSLY, DID YOUR BRAIN FALL OUT?' [roll] '... yes.'](/images/bayesian-statistics-and-multi-armed-bandits/frequentists_vs_bayesians.png)