Introduction

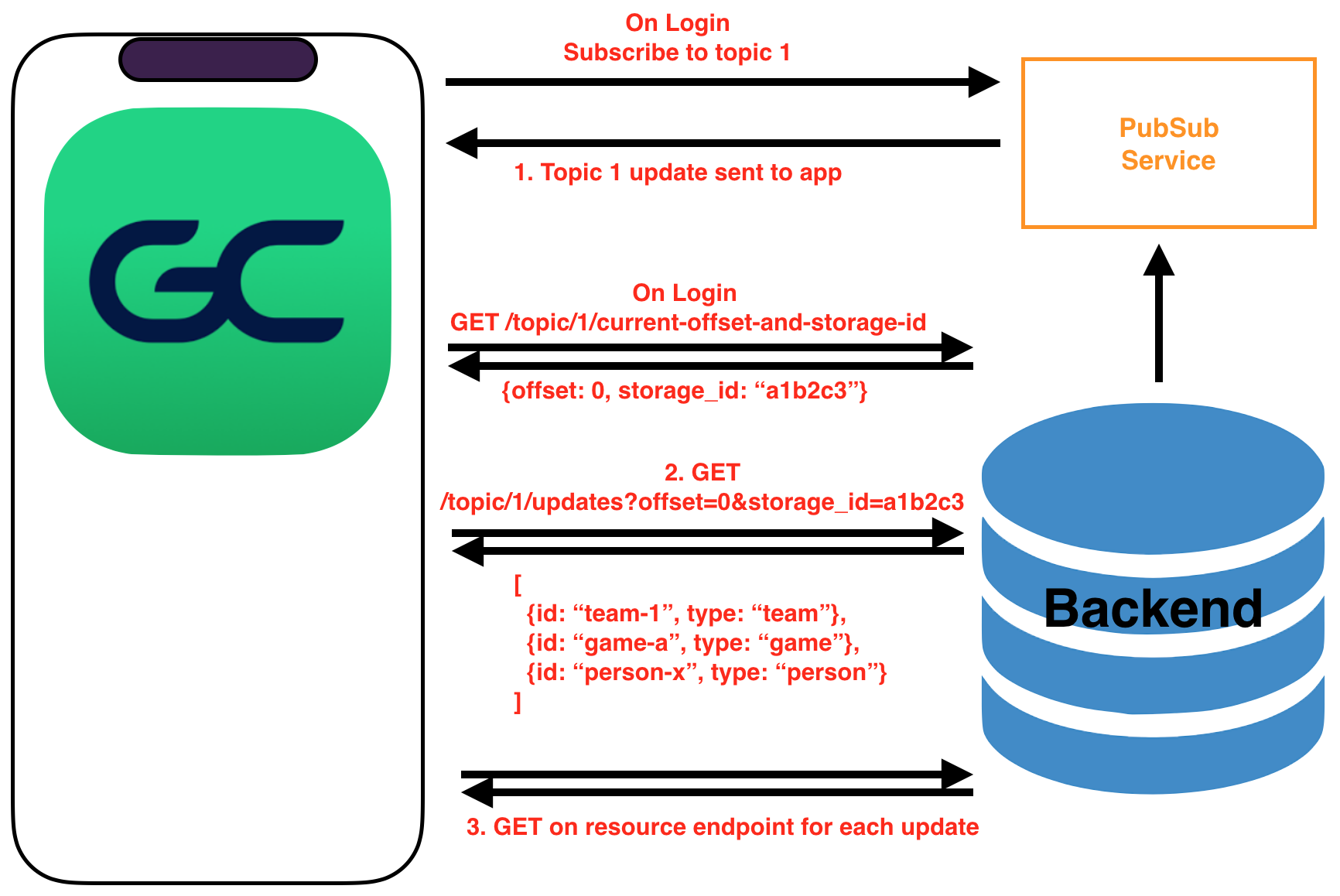

In my last article I talked about the sync system for our new GC Team Manager app and the trade-offs we considered in our design process. To reiterate, the high-level structure we settled on was a backend Pub/Sub service, with small granular updates, and we needed to account for the lack of ordering in message delivery. In this article I will cover how we implemented and made this sync system work.

I mentioned in the last post that part of the reason we settled on Pub/Sub was because we could use an iterative approach instead of all or nothing. This iteration broke down into two distinct parts, Asynchronous Polling Sync and Pub/Sub Sync. Asynchronous Polling Sync relied on Asynchronous Polling and topic updates. Pub/Sub Sync built device notification on top of Pub/Sub Sync. I will describe each in turn.

Asynchronous Polling Sync

The goal of Asynchronous Polling Sync was to solve a few distinct problems. First, it focused on building an algorithm that was resilient to out-of-order messages received by the devices. Second, it focused on turning backend database updates into targeted sync topic updates. To simplify the initial build, Asynchronous Polling Sync specifically avoided solving the problem of pushing topic updates to the devices themselves. It did this by allowing the devices to use Asynchronous Polling to get all updates. Switching from Asynchronous Polling to direct topic updates is the provenance of Pub/Sub Sync. I will cover each part of Asynchronous Polling Sync.

Asynchronous Polling Sync Algorithm

Handling Out of Order Messages

When thinking about the out-of-order messaging problem, the goal is to ensure that no matter what order message are received in, all devices will end up in a consistent state. When we were thinking through possible ways to do this in our sync system, we realized that the type of message being sent can help you solve this problem. As a demonstration I will consider three types of updates the backend could send to the app: send the data values of the updates, send the type of data change(update, delete, etc), or just send the id of the thing that changed.

Scenario 1: Team 1 is created then updated. All apps receive updates in the correct order

- Specific Updates: 1.

{id: <team_1>, type: 'team', updates: 'created'}2.{id: <team_1>, type: 'team', updates: {name: 'Cool New Name'}} - Type of Updates: 1.

{id: <team_1>, type: 'team', change_type: 'created'}2.{id: <team_1>, type: 'team', change_type: 'updated'} - Id and type updates: 1.

{id: <team_1>, type: 'team'}2{id: <team_1>, type: 'team'}

Scenario 2: Team 1 is created then updated. All apps receive updates in reverse order

- Specific Updates: 1.

{id: <team_1>, type: 'team', updates: {name: 'Cool New Name'}}2.{id: <team_1>, type: 'team', updates: 'created'} - Type of Updates: 1.

{id: <team_1>, type: 'team', change_type: 'updated'}2.{id: <team_1>, type: 'team', change_type: 'created'} - Id and type updates: 1.

{id: <team_1>, type: 'team'}2{id: <team_1>, type: 'team'}

As you can see, all update types work when messages are received in order. But as soon as messages start to be handled out-of-order, sending the id and the type of update is the only method that cannot lead to incorrect app side data. All the device has to do when it receives an update is to go to the respective API resource endpoint and load the current data. This method will always lead to the app having the most up to date data. (Note that the type of objects sent in our updates map nicely to the resources of in our API.) This simple update then reload algorithm lets our sync system be robust to out-of-order messages.

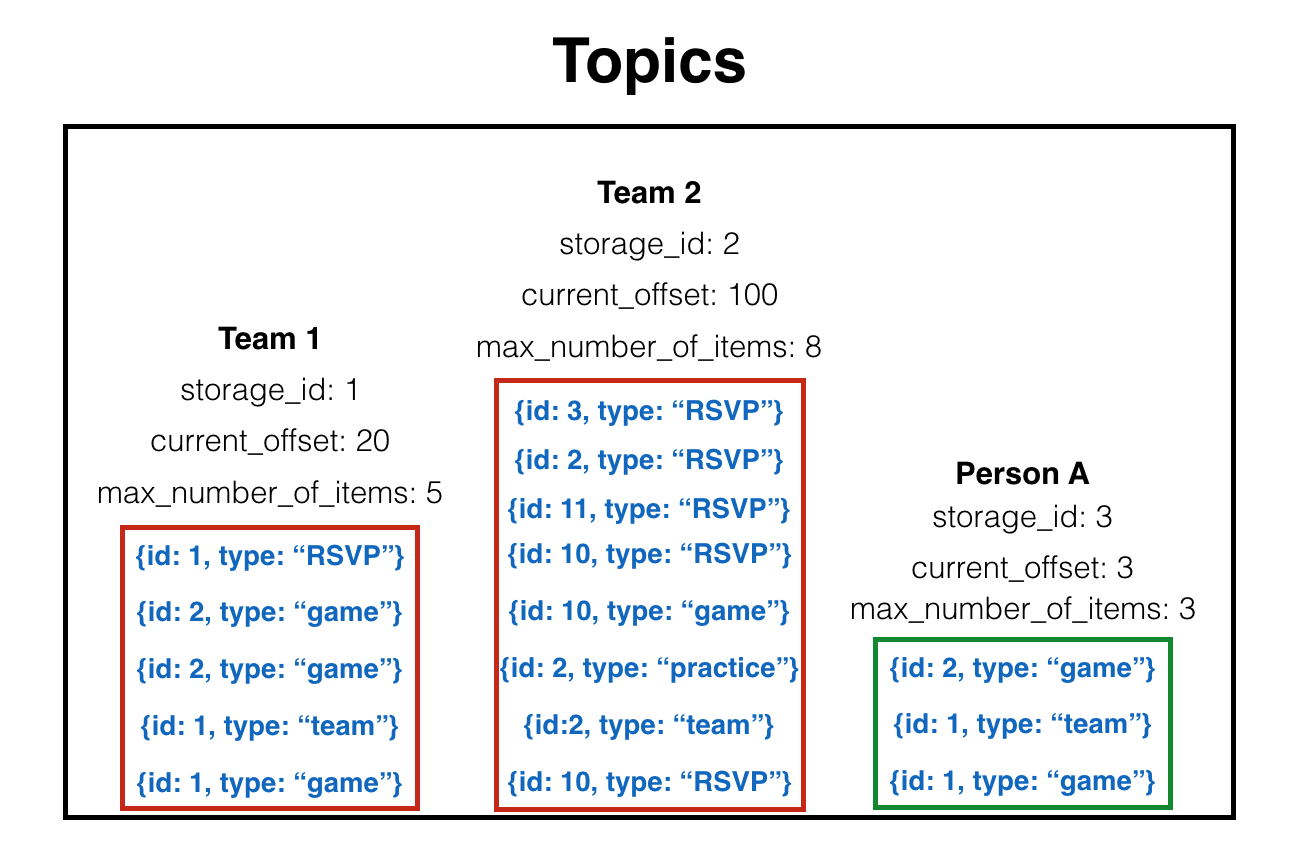

Topics

We now have a type of update that we can send that allows the system to survive out-of-order messages. But specific updates are just part of the solution. I also need to discuss the topics themselves and how they are structured.

- We have a few high level topic types. These were chosen based off of our resource model. Any other resource updates are put in those high level topics.

- Each topic contains a list of update objects described above

- Each topic also has three other important values

current_offset- This number can be used to help figure out what updates each device has already seen. It is a number indicating the most recent update sent to the topic. Each new update pushed into the topic increments thecurrent_offset.max_number_of_items- This number indicates the max number of updates to store for this topic. We structure our topics to only store a certain number of updates depending on the type of topic.storage_id- If we change the storage database or reset the updates, thestorage_idallows us to communicate that effectively to the app. As a simplification, this value indicates the version of the topic you are looking at.

Algorithm

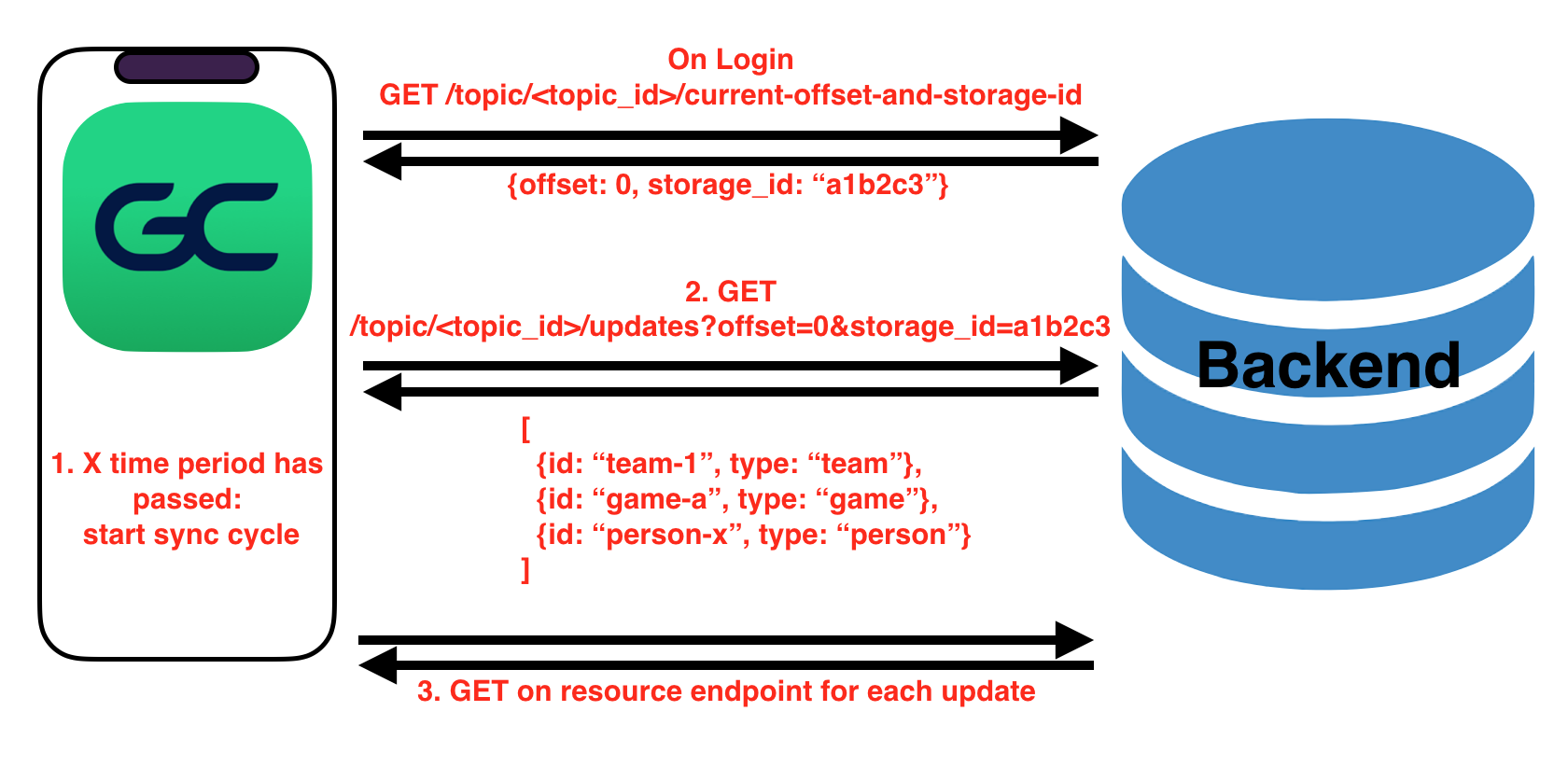

Given the structures above we use the following algorithm in Asynchronous Polling Sync:

- When the app initially starts up, it gets the

current_offsetandstorage_idfor all topics it cares about. (This same procedure happens when it decides it cares about a new topic). - When the app reopens and/or every X minutes, the app asks for all updates on all topics it cares about and sends down the

current_offsetandstorage_id- If the

storage_idsent does not match that found in the backend, a specific error is returned. If the app gets that error it knows it needs to do a full resync of that topic - If the

current_offsetsent <current_offsetin the backend -max_number_of_itemsa specific error is returned. If the app gets that error it knows to do a full resync of that topic. - If both

storage_idandcurrent_offsetare valid, the backend returns back a list of all updates whose offsets are between the app’scurrent_offsetandcurrent_offsetin the backend.

- If the

- For each update the app receives back it attempts to reload the the resource described in the update from the API.

GET /persons/<person_id>,GET /games/<game_id>, etc. - When it has successfully reloaded all resources mentioned in the updates, the app updates the

current_offsetfor the topic.

This algorithm and the structures described make it very easy for the app to get updates it cares about, every X minutes, without having to worry about out-of-order messages.

Database updates -> Sync topic updates

Where to store Updates

Now that we have a robust app sync algorithm, we still need a way to start pushing updates into the topics when someone alters an API resource. Before we can decide how to transform backend updates to sync topic updates, we first need to figure out where to store our updates. What type of database should we use? The main database for our backend is Postgres. But there were a few reasons we were hesitant to just stick our updates in Postgres:

- We did not want the large number of sync updates to swamp out our responsiveness to actual API calls

- Making a dynamic number of topics to hold updates + the three variables each topic needs, ends up being pretty hard to do in Postgres.

- Contention on the topic updates table could end up very high which could slowdown all Postgres operations.

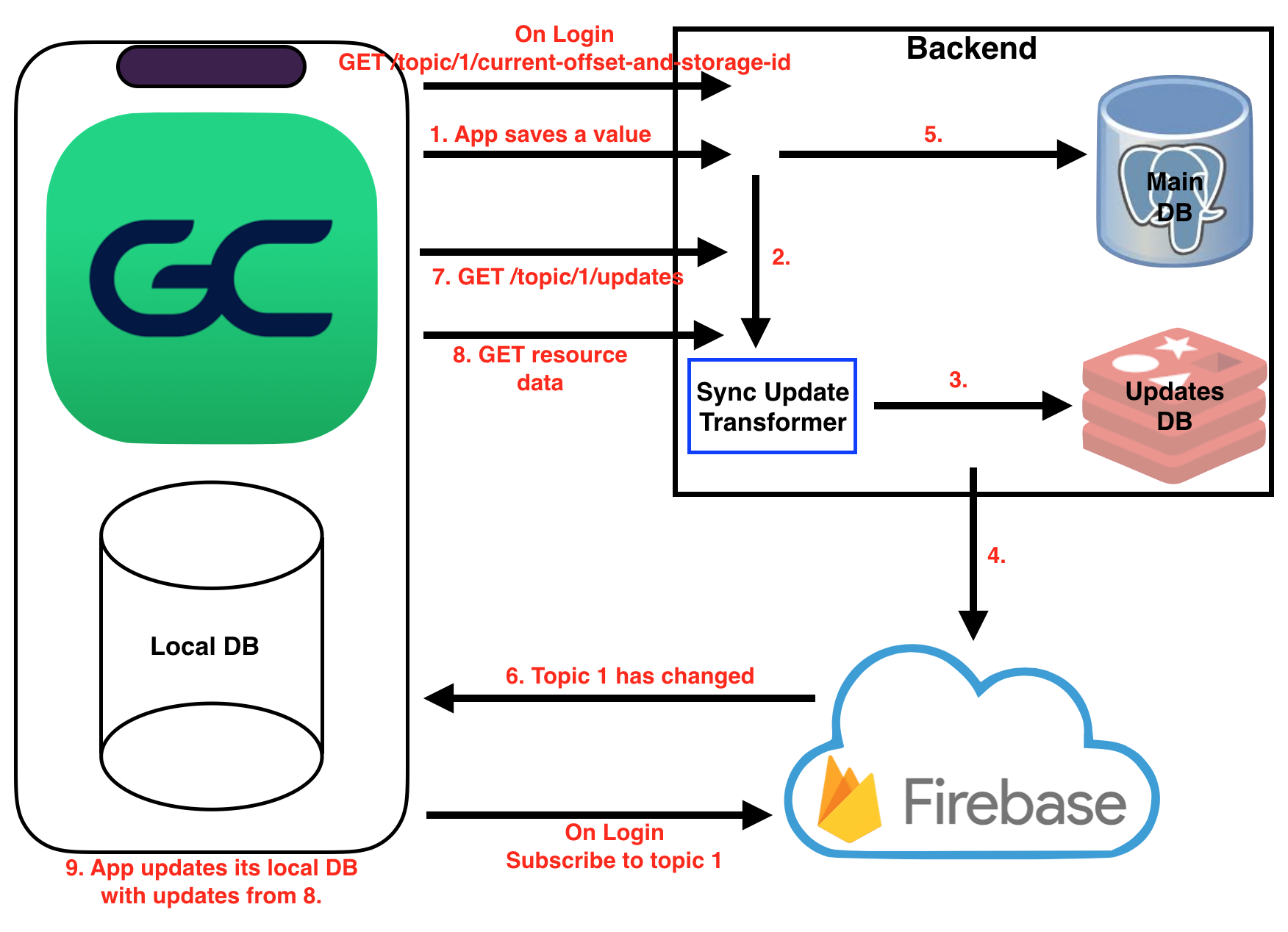

We needed a system which is super fast, has great list support, provides transactions, and is simple to get up and running. For these reasons we decided to store all of our updates in Redis.

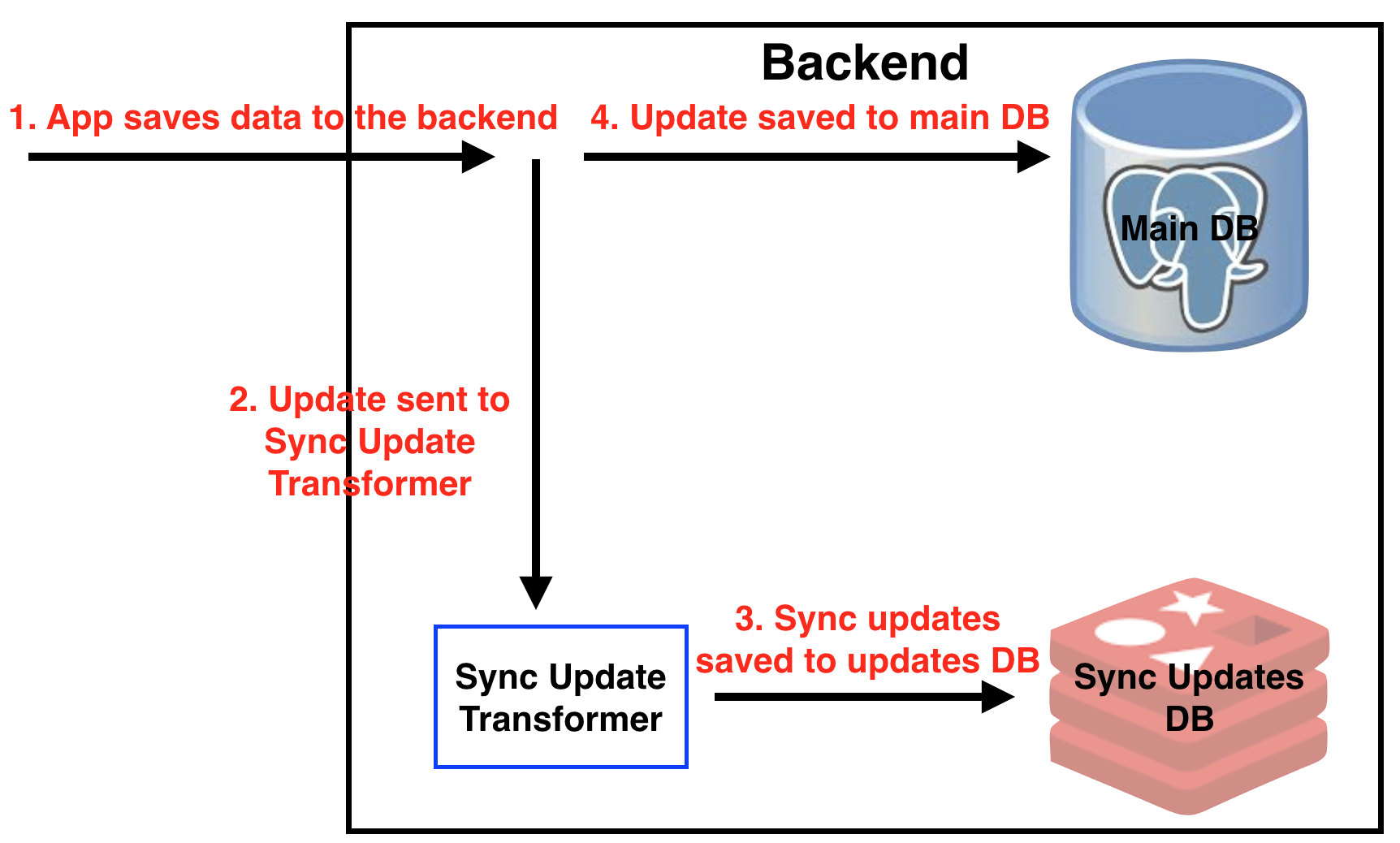

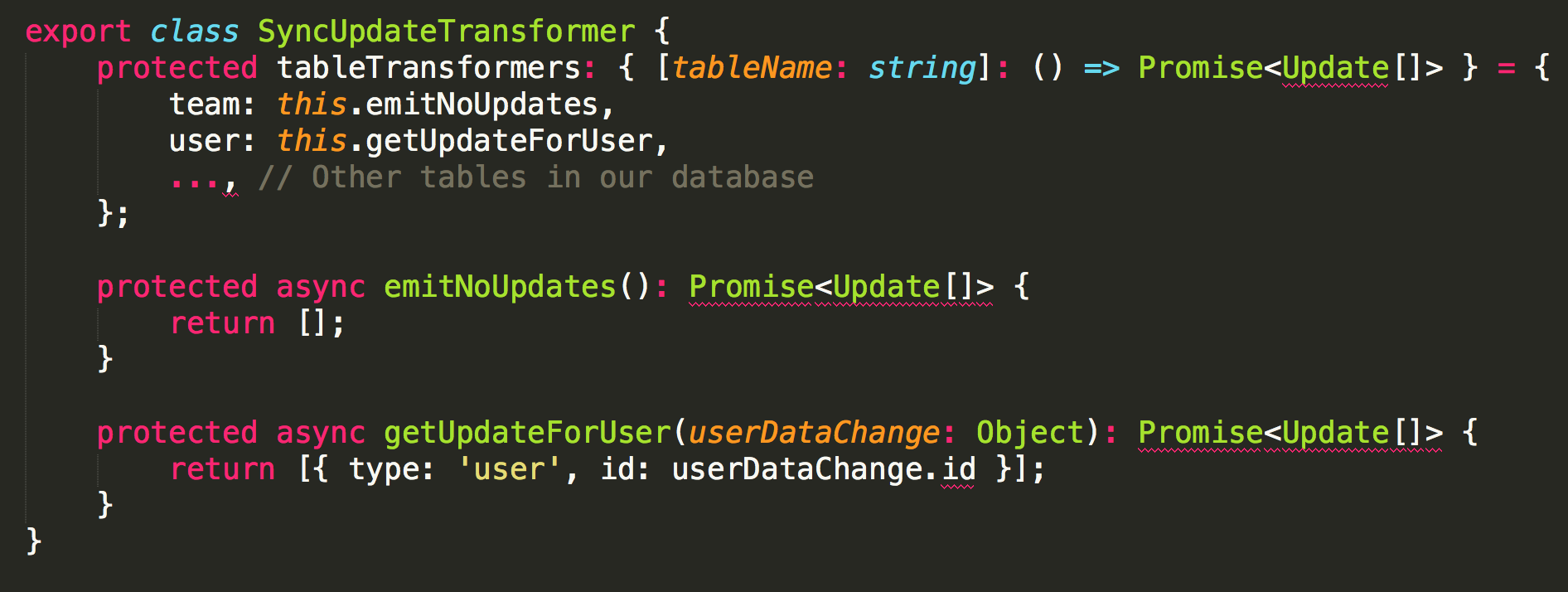

Transformation

So we now have the place to save our updates, but we still need a way to translate the Postgres database update to sync topic updates. We need some sort of transformer like in the picture above. This transformation actually ends up being very easy. We have a 300 line class which takes in query and update objects for each Postgres write and transforms those into a list of topic updates. The topic updates are then saved to Redis and then the main database updates are saved to Postgres. A simplified version of our transformer class can be seen below.

After we had built the features and functionality described above, Asynchronous Polling Sync was finished. That left us the time to prioritize and build Pub/Sub Sync when it became appropriate.

Pub/Sub Sync

The main difference between Asynchronous Polling Sync and Pub/Sub Sync is that Pub/Sub Sync replaces the Asynchronous Polling loop with a Pub/Sub service. All other parts of the system remain the same. This allows the devices to receive updates near instantaneously. Once again there are two pieces to consider when we built out Pub/Sub Sync, the algorithm to use for sending topic updates to the devices and how to actually build the Pub/Sub service.

Pub/Sub Algorithm

Similar to Asynchronous Polling Sync, there are many different algorithms we could use when pushing updates to the Pub/Sub service. Originally we just planned to send the full sync updates to the Pub/Sub service. This strategy ended up being suboptimal because there are times when Pub/Sub messages are dropped and not sent to the devices they were supposed to. Sending our full topic updates meant our algorithms depended on all devices receiving all sync updates. When this turned out not to be true we would then require a lot of extra complexity to handle dropped messages. Instead, we decided to just send a blank update that indicates something has changed on the topic: {changed}. When a device receives this message, it attempts to load all updates in that topic. For each update it loads, it GETs the proper resource from our API. This algorithm adds an extra step and network call, but keeps the overall algorithm very simple.

How to send Pub/Sub system updates

We now have an algorithm we want to implement to finish up our sync system. How do we build out this Pub/Sub service to send updates? Lucky for us, after some research and thought, we were able to find a prebuilt system that could serve as our Pub/Sub service: Google’s Firebase Cloud Messaging. (Specifically FCM’s topic feature). This free system allows devices to subscribe to topics and will make sure messages sent to those topics are delivered to those devices. The system also has a bunch of nice features: storing a certain number of messages per device if an app cannot immediately be reached, a very long TTL for messages, a pretty high per topic rate limit, and seemingly no overall rate limit. This system was also very easy to integrate with from both the client and backend side. We pretty much just dropped in FCM as our Pub/Sub Service and with that our sync system was finished.

Conclusion

We have come to the end of our discussion of our new sync system. We started out with an ideal sync system and some constraints on what our system needed to be able to do. We covered the trade-offs and criteria we considered when designing our system. Finally I covered all the technologies, algorithms, and systems needed to implement a sync system incredibly close to our ideal. The full flow implementation of our system can be seen above.